Apache Tomcat, developed by the Apache Software Foundation, is an open-source Java servlet container that also functions as a web server. While over 10,000 websites rely on Tomcat as a web server, a Plumbr study of Java application servers showed that over 60% of websites that use Java technology relied on Apache Tomcat to host the business logic.

Production environments must perform well. This requires that Apache Tomcat be configured to handle the maximum possible load while still providing the best response time to users. The performance an application server delivers often depends on how well it is configured, and the default settings are often not optimal for production.

At eG Innovations, our eG Enterprise IT performance monitoring solution uses Apache Tomcat as the web server. Over the years, we have discovered several tips and tricks for configuring Tomcat to achieve the highest level of scalability possible. This blog post documents our learnings on best practices that you should employ when deploying Tomcat in production.

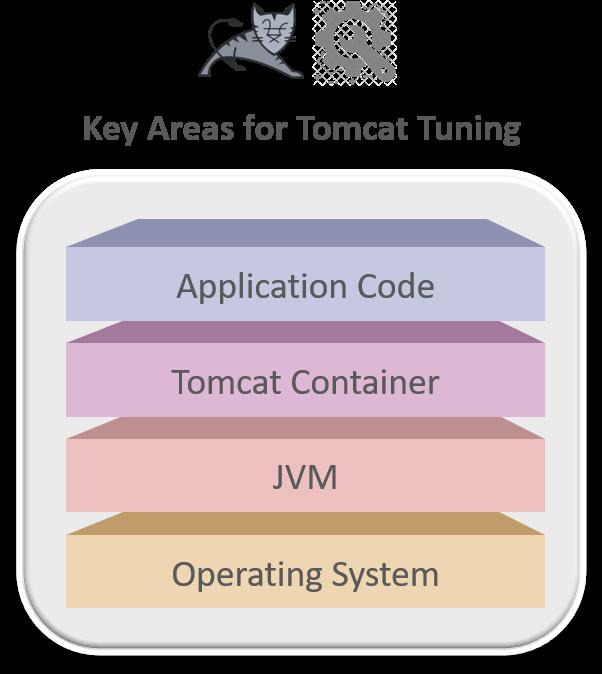

A first step to achieving high performance is to recognize that tuning the Tomcat application server alone is not sufficient. After all, Tomcat runs on top of a Java virtual machine (JVM). So, a poorly configured JVM will compromise performance. Likewise, the JVM runs on an operating system and it is important to have the best possible operating system configuration to achieve the highest performance possible. Even bottlenecks in the application code can result in “Tomcat is slow” complaints. All in all, a holistic approach must be taken for Tomcat performance tuning.

Performance tuning must be done at every layer: the operating system, JVM, Tomcat container, and at the application code level

In the following sections, we will present best practices to configure the operating system, JVM, Tomcat container, and application code for best possible performance.

Since Tomcat uses a JVM, the performance of the JVM impacts Tomcat’s performance as well.

Before you start adjusting any settings, make sure that you have chosen a modern JVM for your application. Many benchmarks indicate 5-20% performance gains from each version of Java (refer to this article for more details).

Many JVMs are available in 32-bit and 64-bit modes. While the 32-bit mode is limited to about 2GB of memory, 64-bit JVMs allow the Java heap to be set much higher. Hence, make sure you are using a 64-bit JVM for best performance and highest scalability.

Garbage collection is the process by which Java programs perform automatic memory management. In the past, garbage collection was done in a stop-the-world manner: when garbage collection happened, the application was paused so that memory could be reclaimed. Today, many garbage collector implementations do much of their work concurrently with the running application.
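To check which JVM and garbage collectors a Tomcat instance is actually running with, and how much of its uptime goes to garbage collection (the overhead figure discussed below), the standard java.lang.management API is enough. This is a sketch of our own, not part of Tomcat: the class name JvmGcReport is hypothetical, and measuring overhead as total collection time divided by JVM uptime is an assumption. For concurrent collectors such as ZGC, the reported collection time can include concurrent work rather than only pauses, so treat the percentage as an approximation.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

/** Reports the running JVM, its collectors, and the share of uptime spent in GC. */
final class JvmGcReport {

    /** Names of the collectors active in this JVM, e.g. "G1 Young Generation". */
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    /** Percentage of JVM uptime spent in garbage collection, summed across collectors (approximate). */
    static double gcOverheadPercent() {
        long gcMillis = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long t = gc.getCollectionTime(); // -1 if this collector does not report its time
            if (t > 0) gcMillis += t;
        }
        long uptimeMillis = ManagementFactory.getRuntimeMXBean().getUptime();
        return uptimeMillis > 0 ? 100.0 * gcMillis / uptimeMillis : 0.0;
    }

    public static void main(String[] args) {
        System.out.println("Java version: " + System.getProperty("java.version"));
        // HotSpot-specific property; it may be absent on other JVMs.
        String model = System.getProperty("sun.arch.data.model");
        System.out.println("Data model:   " + (model == null ? "unknown" : model + "-bit"));
        System.out.println("Collectors:   " + collectorNames());
        double overhead = gcOverheadPercent();
        System.out.printf("GC overhead:  %.2f%% of uptime%n", overhead);
        if (overhead > 5.0) {
            System.out.println("Warning: GC time exceeds the 5% guideline");
        }
    }
}
```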

For best performance, choose a modern garbage collector such as G1GC (the Garbage-First Garbage Collector) or the Z Garbage Collector (ZGC).

The MaxGCPauseMillis setting for the JVM sets the target for the maximum GC pause time in the environment. It is recommended that this value be between 500 and 2000 ms for best performance. Longer pause targets increase throughput, while shorter pause targets reduce latency at the cost of some throughput.

In addition to choosing the right GC settings, monitor the Tomcat server in production and make sure that the percentage of time the JVM spends on garbage collection stays low. Any value over 5% will be detrimental to Tomcat’s performance.

Memory availability in the JVM can also adversely impact Tomcat performance.

You have to make sure that sufficient memory is available in all the heap and non-heap memory pools. If any memory pool runs out of space, you will encounter OutOfMemoryError errors and the application can fail in unexpected ways. Use the -Xmx and -Xms JVM flags to set the maximum and initial heap size (-Xss sets the per-thread stack size), and the -XX flags to size the permanent generation (-XX:MaxPermSize, Java 7 and earlier) or Metaspace (-XX:MaxMetaspaceSize, Java 8 and later), depending on the version of the JRE being used (read this article for additional reference).

Make sure that the memory available to each of the memory pools of the JVM is sufficient. Memory shortage will adversely affect Tomcat server performance.
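A minimal sketch of checking how full each memory pool is from inside the JVM. The class name MemoryPoolReport is hypothetical, and the heap itself would still be sized with flags such as -Xms and -Xmx, using values you tune for your own workload. Pools without a configured limit report a maximum of -1, which the sketch prints as "no limit".

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;
import java.util.LinkedHashMap;
import java.util.Map;

/** Prints how full each JVM memory pool (heap and non-heap) currently is. */
final class MemoryPoolReport {

    /** Utilization (0-100) per pool; pools without a defined maximum are reported as -1. */
    static Map<String, Double> poolUtilization() {
        Map<String, Double> result = new LinkedHashMap<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage();
            if (usage == null) continue;          // pool is no longer valid
            long max = usage.getMax();            // -1 when the pool has no limit (e.g. uncapped Metaspace)
            double pct = max > 0 ? 100.0 * usage.getUsed() / max : -1.0;
            result.put(pool.getType() + " / " + pool.getName(), pct);
        }
        return result;
    }

    public static void main(String[] args) {
        poolUtilization().forEach((name, pct) ->
            System.out.printf("%-45s %s%n", name,
                pct < 0 ? "no limit" : String.format("%.1f%%", pct)));
    }
}
```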

Setting the memory limits is often a trial-and-error process. Limits that are too low can result in exceptions, while limits that are too high waste resources. Use a JVM monitoring tool and analyze performance over a week or more, including peak hours, to determine optimal values for the JVM memory pools.

If memory grows unbounded in the JVM, you will need to determine whether there is a memory leak in the application.
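One simple leak signal is the amount of heap still in use immediately after garbage collection, which the JDK exposes through MemoryPoolMXBean.getCollectionUsage(). Used heap naturally rises and falls between collections, but if the post-GC figure keeps climbing across samples taken over hours or days, objects are surviving collection and a leak is likely. This is a sketch under those assumptions: the class name HeapAfterGc and the strictly-increasing test are our own simplifications, and a real monitor would examine a longer trend than three samples.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

/** Sketch of a leak signal: heap still in use after the most recent garbage collection. */
final class HeapAfterGc {

    /** Bytes of heap in use after the last collection, summed over heap pools that report it. */
    static long heapUsedAfterLastGc() {
        long total = 0;
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) continue;
            MemoryUsage afterGc = pool.getCollectionUsage(); // null if the pool does not support it
            if (afterGc != null) total += afterGc.getUsed();
        }
        return total;
    }

    /** True if every sample exceeds the previous one (a crude "unbounded growth" test). */
    static boolean strictlyIncreasing(long[] samples) {
        for (int i = 1; i < samples.length; i++) {
            if (samples[i] <= samples[i - 1]) return false;
        }
        return samples.length > 1;
    }

    public static void main(String[] args) throws InterruptedException {
        long[] samples = new long[3];
        for (int i = 0; i < samples.length; i++) {
            samples[i] = heapUsedAfterLastGc();
            System.out.println("Heap used after last GC: " + samples[i] / 1024 + " KB");
            Thread.sleep(1000); // in production, sample every few minutes over days
        }
        System.out.println("Monotonic growth: " + strictlyIncreasing(samples));
    }
}
```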

Take a heap dump using built-in Java tools such as JVisualVM and JConsole. Then use a tool such as the Eclipse Memory Analyzer (MAT) to identify memory leak suspects. MAT’s Dominator Tree will help you narrow down which threads/objects are causing the memory leak.

Finally, track thread activity in the JVM.

While the total number of threads in the JVM must be tracked to discover thread leaks, it is also important to track blocked and deadlocked threads, as they are detrimental to performance. Stack traces of blocked and deadlocked threads can reveal code-level issues in the application.

Identifying thread blocks and deadlocks causing application hangs
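Both checks, deadlock detection here and per-thread CPU usage as discussed next, can be sketched with the JDK’s ThreadMXBean. The class name ThreadHealthCheck is hypothetical, and the code must run inside the JVM being inspected (for example, from a diagnostic servlet); JVM monitoring tools read the same data remotely over JMX.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.List;

final class ThreadHealthCheck {

    private static final ThreadMXBean THREADS = ManagementFactory.getThreadMXBean();

    /** Descriptions of threads deadlocked on monitors or ownable synchronizers; empty if none. */
    static List<String> deadlockReports() {
        List<String> reports = new ArrayList<>();
        long[] ids = THREADS.findDeadlockedThreads(); // null when no deadlock exists
        if (ids == null) return reports;
        for (ThreadInfo info : THREADS.getThreadInfo(ids, true, true)) {
            if (info != null) reports.add(info.toString()); // lock owner plus a truncated stack
        }
        return reports;
    }

    /** Name and CPU time of the thread that has used the most CPU so far, or null if unavailable. */
    static String busiestThread() {
        if (!THREADS.isThreadCpuTimeSupported()) return null;
        String busiest = null;
        long maxNanos = -1;
        for (long id : THREADS.getAllThreadIds()) {
            long cpu = THREADS.getThreadCpuTime(id); // -1 if the thread died or measurement is disabled
            ThreadInfo info = THREADS.getThreadInfo(id);
            if (cpu > maxNanos && info != null) {
                maxNanos = cpu;
                busiest = info.getThreadName() + " (" + cpu / 1_000_000 + " ms CPU)";
            }
        }
        return busiest;
    }

    public static void main(String[] args) {
        System.out.println("Total live threads: " + THREADS.getThreadCount());
        List<String> deadlocks = deadlockReports();
        System.out.println(deadlocks.isEmpty() ? "No deadlocks detected" : String.join("\n", deadlocks));
        System.out.println("Busiest thread: " + busiestThread());
    }
}
```

Sampling busiestThread() periodically and comparing the deltas, rather than the cumulative totals, is what singles out a thread that is currently running away.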

In addition, monitor the CPU usage of individual threads to detect runaway threads that consume CPU and slow down Tomcat. Again, any JVM-level monitoring tool can provide these insights.

In some cases, background threads of an application may be consuming excessive resources. By monitoring thread activity, you can detect such scenarios and even pinpoint the line of code or method that is causing the problem.

As with the JVM, many scalability and security enhancements have been made in recent Tomcat releases. Hence, make sure that you are using the latest version of Tomcat. At the time of writing, Tomcat 9 was the latest version.

Tomcat’s server.xml configuration file includes several elements that can be tweaked to enhance the performance of Tomcat.

Configuring the Connectors

Connectors are the elements that enable Tomcat to receive requests from clients. Each connector instance listens for requests on a specific TCP port on the server.

If different types of workloads come into your Tomcat server, consider configuring multiple connectors, so that one type of traffic is processed on one port and a second type on another. Doing so reduces the chances that the different workloads interfere with one another.

Each incoming request is processed by a thread in Tomcat. A connector’s maxThreads attribute defines the maximum number of threads that can execute simultaneously for that connector (the default is 200). How many simultaneous threads can usefully execute depends on the hardware and the number of CPUs it has: the better the hardware and the more processors it has, the greater the concurrency Tomcat can support.

If the maxThreads attribute is set too low, requests must wait until a thread becomes available to process them, which increases the response times users see. Hence, for best performance, set maxThreads high enough that threads are always available in Tomcat to process incoming requests.

Attributes of a Tomcat connector
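How high is “high enough”? One common way to estimate it, which this post does not itself prescribe, is Little’s law: the average number of requests in service equals the arrival rate multiplied by the average time each request holds a thread (L = λW). The sketch below applies it with a safety margin; the class name, the peak rate, the response time and the 25% headroom are all hypothetical inputs, and for CPU-bound workloads the number of processors still caps useful concurrency.

```java
/** Rough maxThreads estimate using Little's law: busy threads = arrival rate * time per request. */
final class ThreadPoolSizing {

    /**
     * @param peakRequestsPerSecond arrival rate at peak (lambda)
     * @param avgResponseMillis     average time a request occupies a worker thread (W)
     * @param headroom              safety factor, e.g. 1.25 for 25% spare capacity
     * @return suggested number of worker threads, rounded up
     */
    static int suggestedMaxThreads(double peakRequestsPerSecond, double avgResponseMillis, double headroom) {
        if (peakRequestsPerSecond < 0 || avgResponseMillis < 0 || headroom < 1.0) {
            throw new IllegalArgumentException("rate and time must be >= 0, headroom >= 1");
        }
        double busyThreads = peakRequestsPerSecond * (avgResponseMillis / 1000.0);
        return (int) Math.ceil(busyThreads * headroom);
    }

    public static void main(String[] args) {
        // Hypothetical workload: 400 req/s at peak, 250 ms average response time.
        System.out.println(suggestedMaxThreads(400, 250, 1.25)); // prints 125
    }
}
```

With 400 requests per second and a 250 ms average response time, about 100 threads are busy on average, so the sketch suggests 125. Compare that estimate with Tomcat’s default of 200 and with the busy-thread counts you actually observe in production.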

From a monitoring standpoint, it is important to monitor the number of threads active in each connector’s thread pool. If the number of active threads is close to the maxThreads limit, you should consider tuning the Tomcat server configuration to allow for a larger thread pool for the connector.
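Tomcat publishes each connector’s thread pool as a JMX MBean, which is what most monitoring tools read. The sketch below assumes the standalone-Tomcat naming Catalina:type=ThreadPool,name="http-nio-8080" (embedded deployments may use a different domain) and the currentThreadsBusy and maxThreads attributes; verify both in JConsole against your Tomcat version. The 90% threshold and the class name ConnectorPoolMonitor are illustrative, and outside a Tomcat JVM the query simply finds no pools.

```java
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;
import javax.management.MBeanServer;
import javax.management.ObjectName;

/** Reads Tomcat connector thread-pool MBeans from the platform MBean server of the current JVM. */
final class ConnectorPoolMonitor {

    /** True when busy threads reach the given fraction (e.g. 0.9) of maxThreads. */
    static boolean nearLimit(int busy, int maxThreads, double threshold) {
        return maxThreads > 0 && busy >= threshold * maxThreads;
    }

    static List<String> report(double threshold) throws Exception {
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        List<String> lines = new ArrayList<>();
        // Assumed naming: one ThreadPool MBean per connector in the "Catalina" domain.
        for (ObjectName pool : server.queryNames(new ObjectName("Catalina:type=ThreadPool,*"), null)) {
            int busy = ((Number) server.getAttribute(pool, "currentThreadsBusy")).intValue();
            int max = ((Number) server.getAttribute(pool, "maxThreads")).intValue();
            lines.add(pool.getKeyProperty("name") + ": " + busy + "/" + max
                    + (nearLimit(busy, max, threshold) ? "  <-- consider raising maxThreads" : ""));
        }
        return lines;
    }

    public static void main(String[] args) throws Exception {
        List<String> lines = report(0.9);
        System.out.println(lines.isEmpty() ? "No Tomcat thread pools found in this JVM" : String.join("\n", lines));
    }
}
```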

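The choice of connector protocol for handling incoming requests also affects the Tomcat server’s throughput. For example, Tomcat connectors can be blocking or non-blocking; note that the blocking BIO connector was removed in Tomcat 8.5, so the NIO, NIO2 and APR connectors in Tomcat 9 are all non-blocking. See this comparison chart.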

With a blocking connector, each worker thread is tied up until its associated connection is complete. A non-blocking connector, by contrast, manages threads better when requests run longer, and performance tests suggest that it delivers better performance for longer-running requests.

Consider using non-blocking connectors, as they deliver greater performance.

The enableLookups setting for a connector determines whether the Tomcat server performs a reverse DNS lookup to find the hostname of each remote client.

DNS lookups are expensive, and if this value is set to true, a slowdown of the DNS service can make it appear as if Tomcat is slow. Hence, for best performance, set enableLookups to false for all the connectors in use.

Another important connector setting is acceptCount. This is the maximum length of the accept queue, where incoming connections are placed while waiting for a processing thread. When the accept queue is full, additional incoming requests are refused. The default value of 100 is inadequate for typical production workloads.

Hence, for best performance, set acceptCount large enough to accommodate the bursts of incoming connections that the server can receive. If acceptCount is too low, clients will see “connection refused” errors. If the value is too high, the queue will take up additional server memory.

When using the NIO and NIO2 connectors, you can configure the size of the socket read and write buffers used by Tomcat. The socket.rxBufSize and socket.txBufSize attributes govern these buffer sizes.

The larger the values of socket.rxBufSize and socket.txBufSize, the higher the throughput supported. Consider settings of 64KB or higher for these values.

Often, clients connect to the Tomcat server over WAN links. The compression attribute of a connector controls whether Tomcat compresses content when sending it to clients.

Set this attribute to “on” for best performance; Tomcat then uses GZIP compression. The content types to be compressed are listed in the compressibleMimeType attribute.

Any communication between client and server that is primarily text, be it HTML, XML or simply Unicode, can often be compressed by up to 90% using the simple, standard GZIP algorithm. This can greatly reduce network traffic, allowing responses to reach the client much faster while leaving more network bandwidth available for other network-heavy applications.
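The effect of GZIP on repetitive markup is easy to measure with the JDK’s own java.util.zip.GZIPOutputStream. This sketch compresses a synthetic HTML table, our own hypothetical stand-in for a real response, and prints the savings; the exact percentage depends on the content, so it is not a benchmark of Tomcat itself.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPOutputStream;

final class GzipSavings {

    /** GZIP-compresses the given bytes and returns the compressed size in bytes. */
    static int gzippedSize(byte[] data) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gzip = new GZIPOutputStream(out)) {
            gzip.write(data);
        } // closing the GZIP stream flushes remaining data and writes the trailer
        return out.size();
    }

    public static void main(String[] args) throws IOException {
        // Repetitive markup, typical of HTML tables or XML payloads.
        StringBuilder html = new StringBuilder("<html><body><table>");
        for (int i = 0; i < 500; i++) {
            html.append("<tr><td class=\"metric\">server-").append(i)
                .append("</td><td class=\"value\">OK</td></tr>\n");
        }
        html.append("</table></body></html>");
        byte[] raw = html.toString().getBytes(StandardCharsets.UTF_8);
        int compressed = gzippedSize(raw);
        System.out.printf("raw=%d bytes, gzip=%d bytes, saved=%.1f%%%n",
                raw.length, compressed, 100.0 * (raw.length - compressed) / raw.length);
    }
}
```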

Using Executors

When using connectors, a pool of threads is dedicated to each connector. If you are using multiple connectors, you can configure an executor. An executor is a common thread pool that can be shared by multiple connectors. Not only does this allow better sharing of threads across connectors, but it also provides a mechanism to lower the number of threads in the pool should the incoming workload not require these threads for processing. When using a thread pool per connector, Tomcat does not reclaim threads in the pool, so if you see a large spurt of requests once, this can increase the number of threads in the pool for the entire lifetime of the Tomcat server. When using an executor, the maxThreads setting is defined at the executor level. In such a case, Tomcat administrators need to monitor the thread pool activity and usage at the executor level, not at the connector level.
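In server.xml, a shared pool is declared with an Executor element (with attributes such as maxThreads, minSpareThreads and maxIdleTime) and referenced from each connector through its executor attribute. The grow-then-shrink behaviour described above can be illustrated with a plain java.util.concurrent.ThreadPoolExecutor; this is an analogy, not Tomcat’s own executor implementation, and the class name and pool sizes are hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** A pool that grows to absorb a burst, then reclaims idle threads after their keep-alive expires. */
final class ShrinkingPoolDemo {

    static int[] burstThenIdle(int coreThreads, int maxThreads, long keepAliveMillis) throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                coreThreads, maxThreads, keepAliveMillis, TimeUnit.MILLISECONDS,
                new SynchronousQueue<>()); // direct hand-off: new work starts a new thread up to max
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < maxThreads; i++) {
            pool.execute(() -> {
                try {
                    release.await(); // simulate a long-running request
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int duringBurst = pool.getPoolSize(); // every thread up to maxThreads is busy
        release.countDown();                  // the burst ends
        Thread.sleep(keepAliveMillis * 10);   // give idle threads time to time out
        int afterIdle = pool.getPoolSize();   // extra threads reclaimed, core threads remain
        pool.shutdown();
        return new int[] {duringBurst, afterIdle};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] sizes = burstThenIdle(4, 50, 100);
        System.out.println("threads during burst: " + sizes[0] + ", after idle period: " + sizes[1]);
    }
}
```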

Configuring Public SSL Certificates

If your connector is SSL-enabled, make sure that you have configured it with a valid public SSL certificate. Check this blog to understand the performance impact that improper SSL certificate configuration can have on Tomcat performance.

Tune Resource Caching Settings

To improve performance, Tomcat is configured by default to cache static resources. However, the size of the cache must be configured to be large enough to provide performance savings.

To tune Tomcat’s cache settings, find the Context element (in server.xml or context.xml) and set the cacheMaxSize attribute to an appropriate value. In Tomcat 8 and later, this attribute belongs on the Resources element nested inside Context.

Database Connection Pooling

Opening connections to the database is expensive. Connection pooling is a common technique for optimizing database access: a pool of open connections is maintained, and requests take connections from the pool and return them once they are done with their tasks. By not having to create and tear down a connection for each request, connection pooling makes application responses faster and reduces the overhead of connection handling on the database server. Tomcat has built-in support for database connection pooling.
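The mechanics of a pool can be sketched in a few lines of Java. This is a hypothetical, minimal illustration rather than Tomcat’s pool (which adds connection validation, eviction and more): objects are created once, borrowed, and returned, and when all of them are in use a borrower waits up to a timeout, which is the same waiting that drives response times up when a real pool is exhausted. Strings stand in for java.sql.Connection objects.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

/** Minimal fixed-size pool: objects are created up front, borrowed, and returned. */
final class SimplePool<T> {
    private final BlockingQueue<T> idle;

    SimplePool(int size, Supplier<T> factory) {
        idle = new ArrayBlockingQueue<>(size);
        for (int i = 0; i < size; i++) idle.add(factory.get()); // pay the creation cost once
    }

    /** Borrow an object, waiting up to maxWaitMillis; returns null if none became free in time. */
    T borrow(long maxWaitMillis) throws InterruptedException {
        return idle.poll(maxWaitMillis, TimeUnit.MILLISECONDS);
    }

    /** Return a borrowed object so that other requests can reuse it. */
    void release(T obj) {
        idle.offer(obj);
    }

    int available() {
        return idle.size();
    }

    public static void main(String[] args) throws InterruptedException {
        SimplePool<String> pool = new SimplePool<>(2, () -> "conn-" + System.nanoTime());
        String a = pool.borrow(100);
        String b = pool.borrow(100);
        String c = pool.borrow(100); // pool exhausted: waits 100 ms, then gives up with null
        System.out.println("third borrow: " + c);
        pool.release(a);
        pool.release(b);
        System.out.println("available after release: " + pool.available());
    }
}
```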

As with thread pools, ensure that the database connection pool’s maxActive setting (named maxTotal in the DBCP 2-based default pool of Tomcat 8 and later), which defines the maximum number of active connections in the pool, is large enough to accommodate the workload it is handling.

Monitor database connection pool usage: if the connection pool is fully utilized, new requests will wait for free connections to become available, causing response times to go up.

Web.xml Optimizations

The server.xml file is used to specify server-specific configurations. There is only one server.xml for each Tomcat server instance. The web.xml file is used to specify the web application specific configurations. There is one web.xml file for each web application deployed on the Tomcat server. The default settings inherited by all web applications are defined by a web.xml file in the main Tomcat configuration directory.

The default property values in this file are tuned for development environments and need to be modified for production deployments. The JSP compiler (Jasper) servlet has a development parameter, which is true by default. Change it to false so that Tomcat does not frequently check JSPs to see whether recompilation is necessary. Precompile JSPs to avoid compilation overhead on production servers. Likewise, set genStringAsCharArray to “true” to generate text strings as more efficient char arrays. Set trimSpaces to “true” to remove unnecessary whitespace bytes from the response.

Inefficient application code can also cause the application deployed on Tomcat to be slow. Logging is a common way to track an application’s operation. However, writing log output to files is a synchronized operation, and excessive logging can actually slow down application performance.
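When debug-level logging is disabled in production, the cost that remains is often building the log message itself. A minimal sketch using java.util.logging (the API implemented by Tomcat’s JULI logging) shows two ways to skip that work; the class and method names are hypothetical, and frameworks such as SLF4J or Log4j offer similar parameterized or lazy forms. This only makes disabled statements cheap; reducing the volume of enabled logging is still necessary.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.logging.Level;
import java.util.logging.Logger;

/** Builds debug messages only when the FINE level is actually enabled. */
final class CheapLogging {
    private static final Logger LOG = Logger.getLogger(CheapLogging.class.getName());
    static final AtomicInteger messagesBuilt = new AtomicInteger();

    /** Stand-in for an expensive toString(), e.g. dumping a large request object. */
    static String expensiveDescription(int requestId) {
        messagesBuilt.incrementAndGet();
        return "request " + requestId + " details...";
    }

    static void handle(int requestId) {
        // Supplier form: the lambda runs only if FINE is loggable for this logger.
        LOG.fine(() -> expensiveDescription(requestId));

        // Equivalent explicit guard, handy when several statements share the check.
        if (LOG.isLoggable(Level.FINE)) {
            LOG.fine("explicitly guarded: " + expensiveDescription(requestId));
        }
    }

    public static void main(String[] args) {
        LOG.setLevel(Level.INFO); // production-style level: FINE is disabled
        for (int i = 0; i < 1000; i++) handle(i);
        System.out.println("messages built: " + messagesBuilt.get()); // prints "messages built: 0"
    }
}
```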

Employ transaction tracing techniques based on byte-code instrumentation to monitor application processing without needing any changes to the application code. These techniques rely on a Java agent, a specially crafted JAR file that uses the instrumentation API provided by the JVM to alter existing byte-code as it is loaded into the JVM.
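To make the mechanism concrete, here is a hypothetical skeleton of a Java agent built on the java.lang.instrument API. It only counts the classes the JVM loads and leaves their byte-code unchanged; a real transaction tracer would rewrite selected methods at this point, typically with a byte-code library such as ASM or Byte Buddy, which this sketch does not include.

```java
import java.lang.instrument.ClassFileTransformer;
import java.lang.instrument.Instrumentation;
import java.security.ProtectionDomain;
import java.util.concurrent.atomic.AtomicLong;

/**
 * Skeleton of a Java agent (hypothetical). When packaging a real agent, declare this
 * class public in its own TracingAgent.java file and name it in the JAR manifest
 * as "Premain-Class: TracingAgent".
 */
final class TracingAgent {
    static final AtomicLong classesSeen = new AtomicLong();

    /** Sees every class as it is loaded; returning null keeps the original byte-code. */
    static final class ClassLoadObserver implements ClassFileTransformer {
        @Override
        public byte[] transform(ClassLoader loader, String className, Class<?> classBeingRedefined,
                                ProtectionDomain protectionDomain, byte[] classfileBuffer) {
            classesSeen.incrementAndGet();
            // A real tracer would return rewritten byte-code here that times selected methods.
            return null;
        }
    }

    /** Called by the JVM before main() when the agent is loaded with -javaagent. */
    public static void premain(String agentArgs, Instrumentation inst) {
        inst.addTransformer(new ClassLoadObserver());
    }
}
```

To load such an agent into Tomcat, add -javaagent:/path/to/tracing-agent.jar to CATALINA_OPTS, for example in bin/setenv.sh; the JAR path is a placeholder.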

IT teams and developers can use this capability to drill down into slow transactions and proactively detect performance problems before they impact end-users.

Distributed transaction tracing used to identify code-level issues in web applications powered by Tomcat

You may often hear that application servers based on Java, such as Tomcat, are slow or not production ready. In this blog, we have provided a range of best practice configurations to get the most out of your Tomcat web application server. Our eG Enterprise application performance monitoring solution uses Apache Tomcat as the core container engine and has been widely deployed to support monitoring of tens of thousands of servers, tens of millions of real-time metrics, and over a hundred thousand end users in production. So, you can be sure that the best practices we have provided here actually work!
